How to explore data in Kafka topics with Lenses - part 1

Lenses comes with a powerful user interface for Apache Kafka to explore historical or in-motion data. Access data easily for debugging, analyzing or reporting.

In this post we are going to see how Lenses can help you explore data in a Kafka topic.

Lenses comes with a powerful user interface for Kafka to explore historical or in-motion data, which you can query with the Lenses SQL Engine. This gives you quick access to data for debugging, analyzing or reporting, without requiring you to be a developer.

In addition, Lenses comes with a set of REST and Web Socket endpoints that make integration with your Kafka data simple. We will see an example of how to integrate with Jupyter notebooks, and also the JavaScript library for building frontend apps!

Let's get started!

Lenses attempts to identify the data types of the Key and the Value in every Kafka topic, even if the two are of different types.

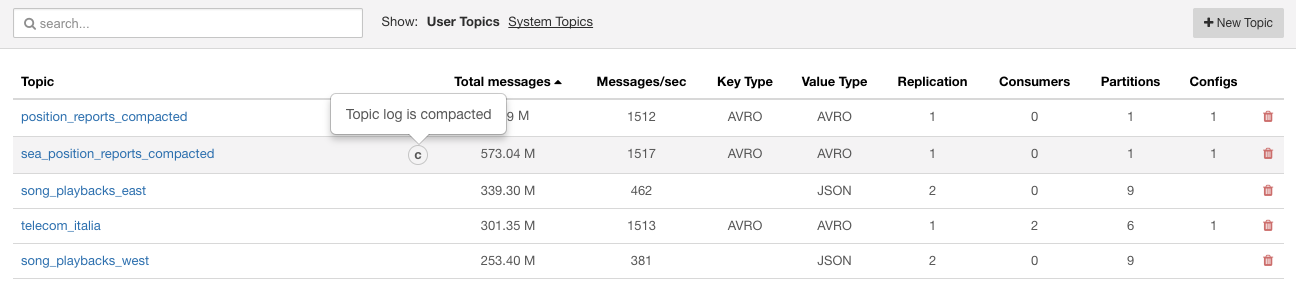

You can see the detected types in the topic list:

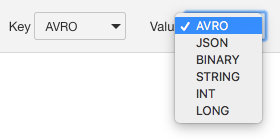

Even though auto-detection kicks in, Lenses allows you to specify the accurate types for the key and the value by selecting one of the default deserializers.

Lenses also supports windowed deserializers in case the data is produced by a Kafka Streams application.

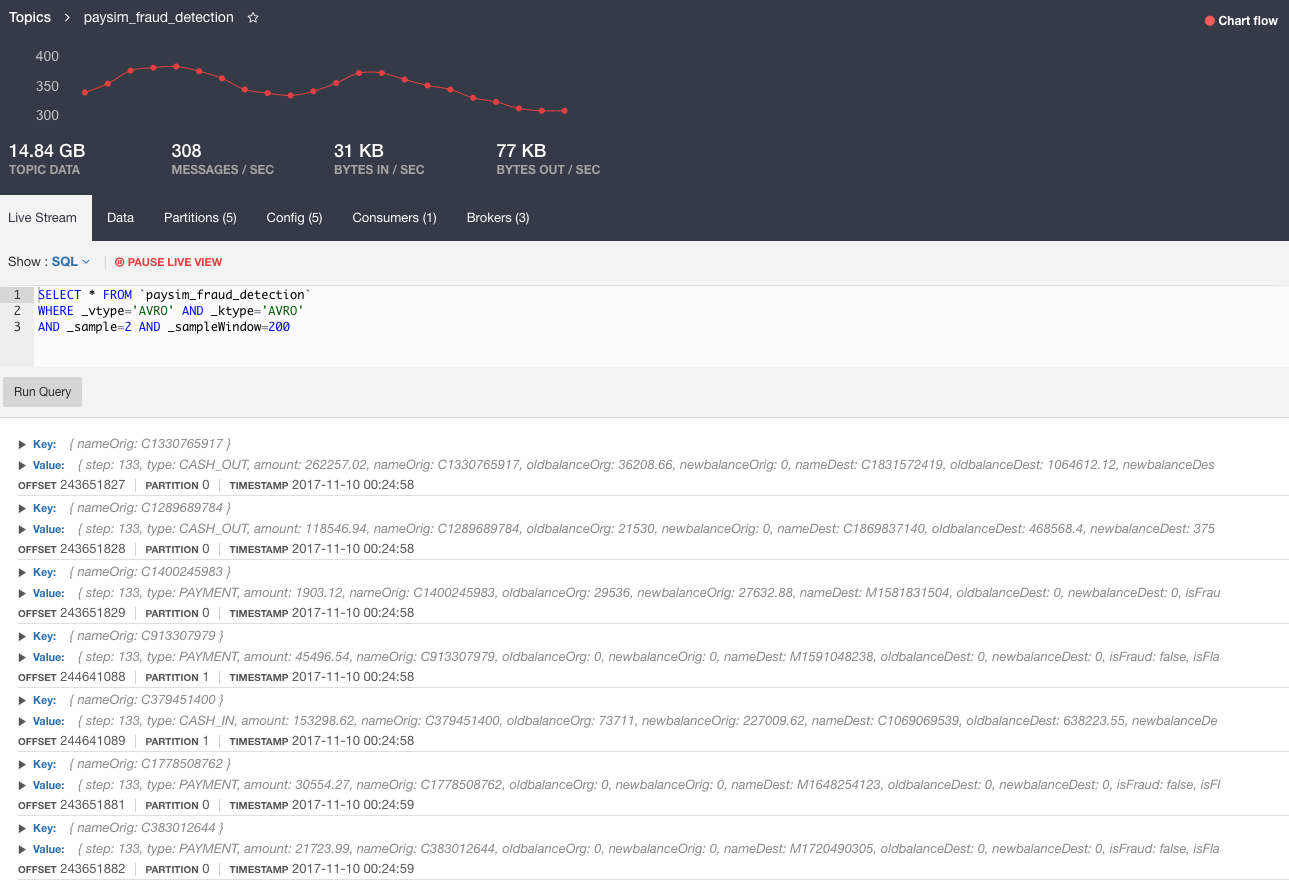

Once you open the topic page, you land in the Live Stream view. Provided that data is being produced, you will see the live stream bringing the new data from your topic into your browser:

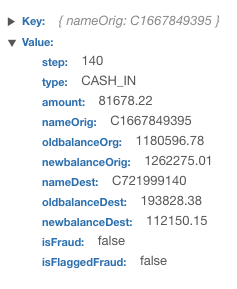

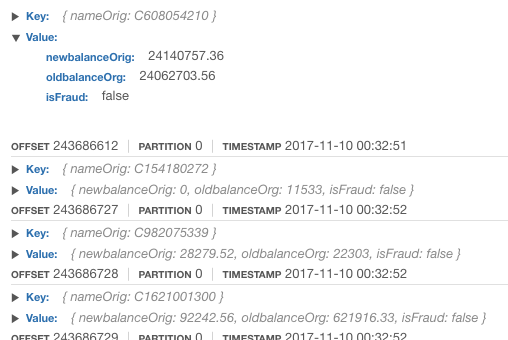

You can hover over your data and expand it to see the fields, or pause the stream.

The live stream applies a default query to your data, but you can also edit the query to filter your data based on Kafka metadata, apply functions, or project fields.

Here is an example in which we apply a simple filter for a specific partition:

Result:

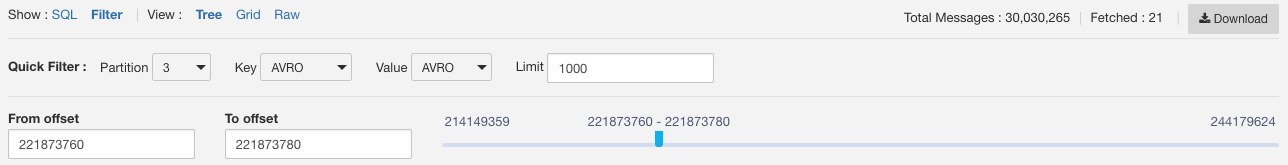
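The effect of a partition filter like this one can be sketched in plain Python, independently of Lenses. The `Record` type, its field names and the sample data are assumptions made for illustration only, not the Lenses data model or query syntax. The sketch lazily keeps only the messages of one partition from a simulated stream.

```python
from dataclasses import dataclass
from typing import Any, Iterable, Iterator


@dataclass(frozen=True)
class Record:
    """Hypothetical stand-in for a Kafka message plus its metadata."""
    partition: int
    offset: int
    timestamp_ms: int
    key: Any
    value: Any


def filter_partition(stream: Iterable[Record], partition: int) -> Iterator[Record]:
    """Lazily yield only the records that belong to the given partition."""
    for record in stream:
        if record.partition == partition:
            yield record


# Simulated stream: three messages spread over two partitions.
sample_stream = [
    Record(0, 10, 1_500_000_000_000, "a", {"amount": 5}),
    Record(1, 7, 1_500_000_000_500, "b", {"amount": 9}),
    Record(0, 11, 1_500_000_001_000, "c", {"amount": 2}),
]

partition_zero = list(filter_partition(sample_stream, partition=0))
```

Because the filter is a generator, it works the same way on an unbounded stream as on this small list: records are checked one at a time as they arrive.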

When you navigate to the Data view of the topic you will be able to view historical data.

By default, Lenses returns up to 1000 rows; this limit is configurable.

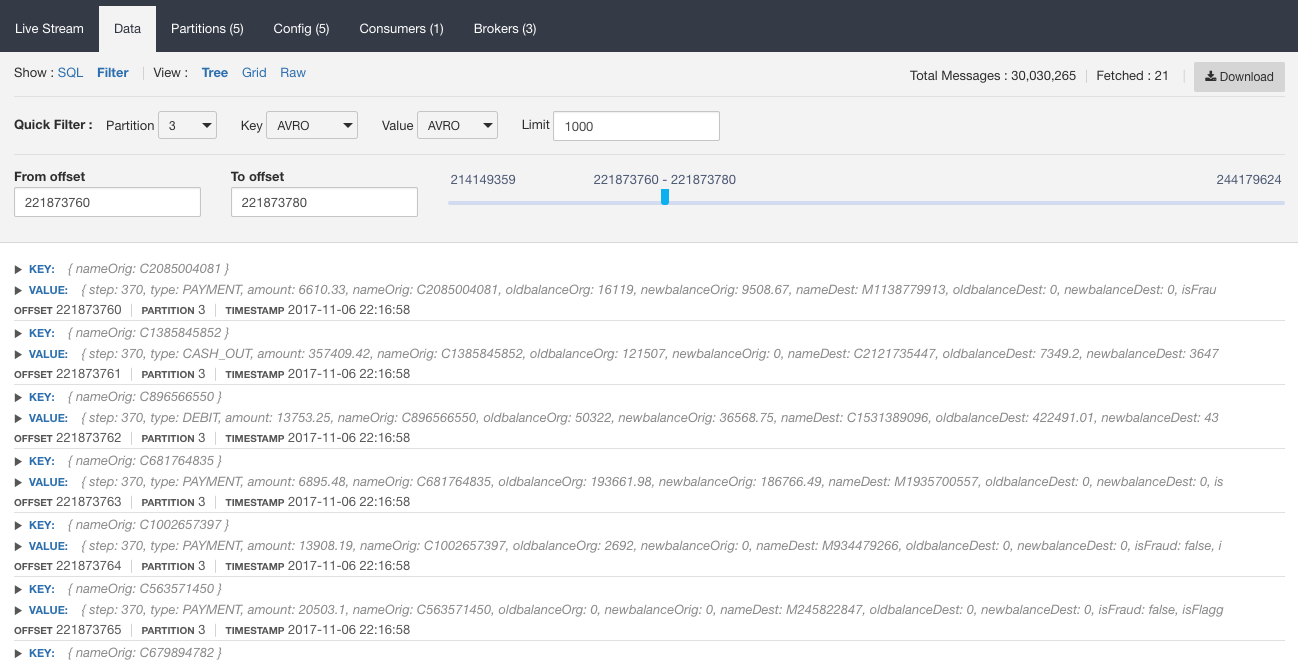

In this view you can query data either by executing SQL queries against Apache Kafka topics or by using the data controls provided by the Quick Filter.

With the Quick Filter you can specify the partition, and set the offset range by dragging the offset slider.

The filter applies the changes to your data immediately.
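As a rough model of what the Quick Filter controls do, the following Python sketch selects rows by partition and inclusive offset range and caps the result at a row limit. The row layout (plain dictionaries with `partition`, `offset` and `value` keys) and the function name `quick_filter` are hypothetical; only the default limit of 1000 rows comes from the text above.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def quick_filter(
    rows: List[Row],
    partition: Optional[int] = None,
    offset_range: Optional[Tuple[int, int]] = None,
    limit: int = 1000,
) -> List[Row]:
    """Return at most `limit` rows matching the partition and offset range."""
    if limit <= 0:
        return []
    selected: List[Row] = []
    for row in rows:
        if partition is not None and row["partition"] != partition:
            continue
        if offset_range is not None:
            low, high = offset_range  # both ends are inclusive
            if not (low <= row["offset"] <= high):
                continue
        selected.append(row)
        if len(selected) >= limit:
            break
    return selected


# Hypothetical data: two partitions with offsets 0..4 each.
rows = [
    {"partition": p, "offset": o, "value": f"msg-{p}-{o}"}
    for p in range(2)
    for o in range(5)
]

result = quick_filter(rows, partition=1, offset_range=(1, 3))
```

Leaving `partition` or `offset_range` as `None` disables that control, which mirrors a filter where only some of the sliders have been moved.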

Using the Lenses SQL Engine, you can access the Key and the Value of a message as well as its metadata. We saw above how to specify the partition. Here is an example of how to apply a time range:

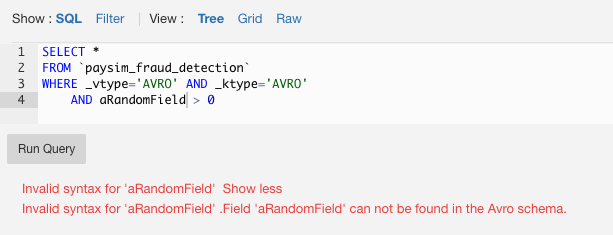
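Kafka record timestamps are expressed in milliseconds since the Unix epoch, so a time-range filter comes down to comparing integers. The sketch below illustrates this in plain Python under stated assumptions: messages are modeled as hypothetical `(timestamp_ms, value)` tuples, the range is treated as half-open (start included, end excluded), and nothing here is Lenses SQL syntax.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, List, Tuple

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
Message = Tuple[int, Any]  # (timestamp in epoch milliseconds, value)


def to_epoch_millis(moment: datetime) -> int:
    """Convert a timezone-aware datetime to milliseconds since the epoch."""
    return (moment - EPOCH) // timedelta(milliseconds=1)


def in_time_range(
    messages: List[Message], start: datetime, end: datetime
) -> List[Message]:
    """Keep messages whose timestamp falls in the half-open range [start, end)."""
    start_ms = to_epoch_millis(start)
    end_ms = to_epoch_millis(end)
    return [m for m in messages if start_ms <= m[0] < end_ms]


# Hypothetical messages produced at 12:00, 12:30 and 13:30 UTC.
noon = datetime(2018, 3, 1, 12, 0, tzinfo=timezone.utc)
messages = [
    (to_epoch_millis(noon), "first"),
    (to_epoch_millis(noon + timedelta(minutes=30)), "second"),
    (to_epoch_millis(noon + timedelta(minutes=90)), "third"),
]

within_hour = in_time_range(messages, noon, noon + timedelta(hours=1))
```

The datetimes must be timezone-aware; mixing them with naive datetimes raises a `TypeError`, which avoids silently filtering on the wrong time zone.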

In the case of Avro data, SQL queries are also validated against your schema:

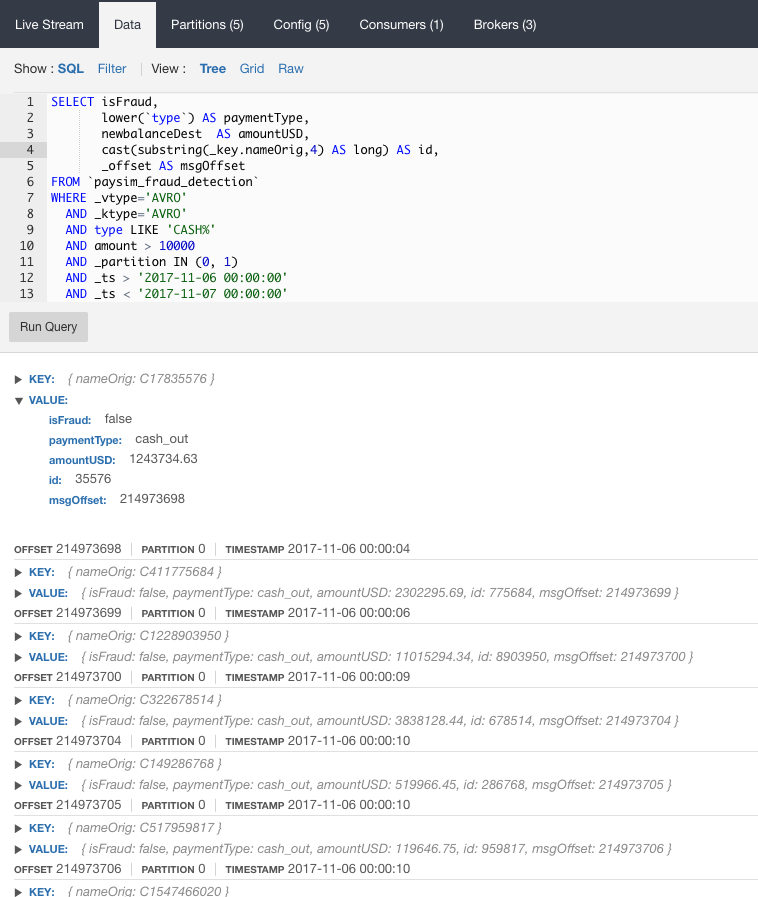

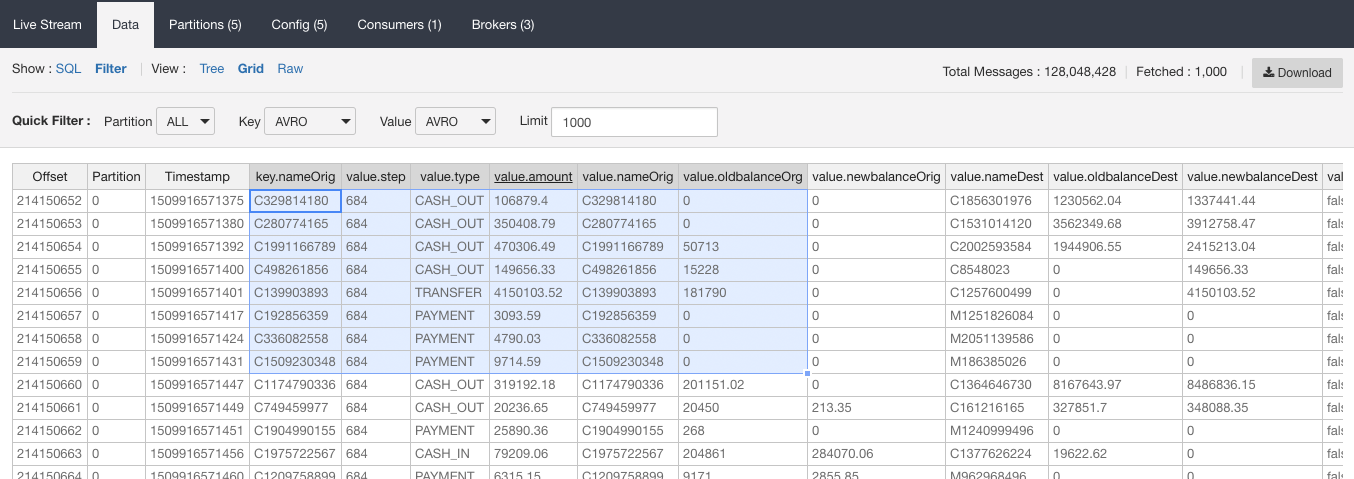

Lenses provides three different ways to explore your data: Tree, Grid and Raw. You can switch between the views, and each one displays the current data set.

We have already used the Tree view in the previous examples, so let's have a look at the Grid view.

In Grid, Lenses flattens the data into an Excel-like format where you can sort the data set and move or re-order the columns. From here you can also copy the whole data set, or part of it, into a spreadsheet or any other relevant tool.

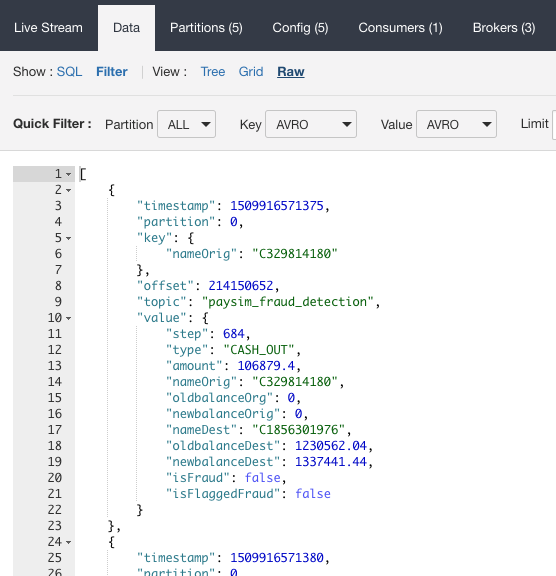
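To illustrate what flattening nested data into grid columns means, here is a minimal Python sketch. It is not how Lenses implements the Grid view; the dotted column names, the `flatten` helper and the sample records are assumptions chosen for the example.

```python
from typing import Any, Dict


def flatten(record: Dict[str, Any], parent: str = "", sep: str = ".") -> Dict[str, Any]:
    """Turn a nested dictionary into a single-level dict of column -> value."""
    flat: Dict[str, Any] = {}
    for name, value in record.items():
        column = f"{parent}{sep}{name}" if parent else name
        if isinstance(value, dict):
            # Recurse into nested objects, prefixing with the parent column.
            flat.update(flatten(value, column, sep))
        else:
            # Scalars and lists are kept as a single cell.
            flat[column] = value
    return flat


# Hypothetical message values with a nested structure.
records = [
    {"id": 2, "customer": {"name": "Zoe", "address": {"city": "Oslo"}}},
    {"id": 1, "customer": {"name": "Ann", "address": {"city": "Athens"}}},
]

grid_rows = [flatten(r) for r in records]

# Once the rows are flat, sorting by any column is a one-liner.
sorted_by_name = sorted(grid_rows, key=lambda row: row["customer.name"])
```

Each flattened row has the same set of keys, so the result maps directly onto a table with one column per key.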

In Raw, Lenses gives a JSON-like view of your data.

Once you’ve done your analysis and finalized your data set, you can also download the data.

Lenses provides access to both historical and real-time data, which you can query with the Lenses SQL Engine.

To make it easy to integrate your apps with Kafka, Lenses exposes this data through REST endpoints and Web Sockets.

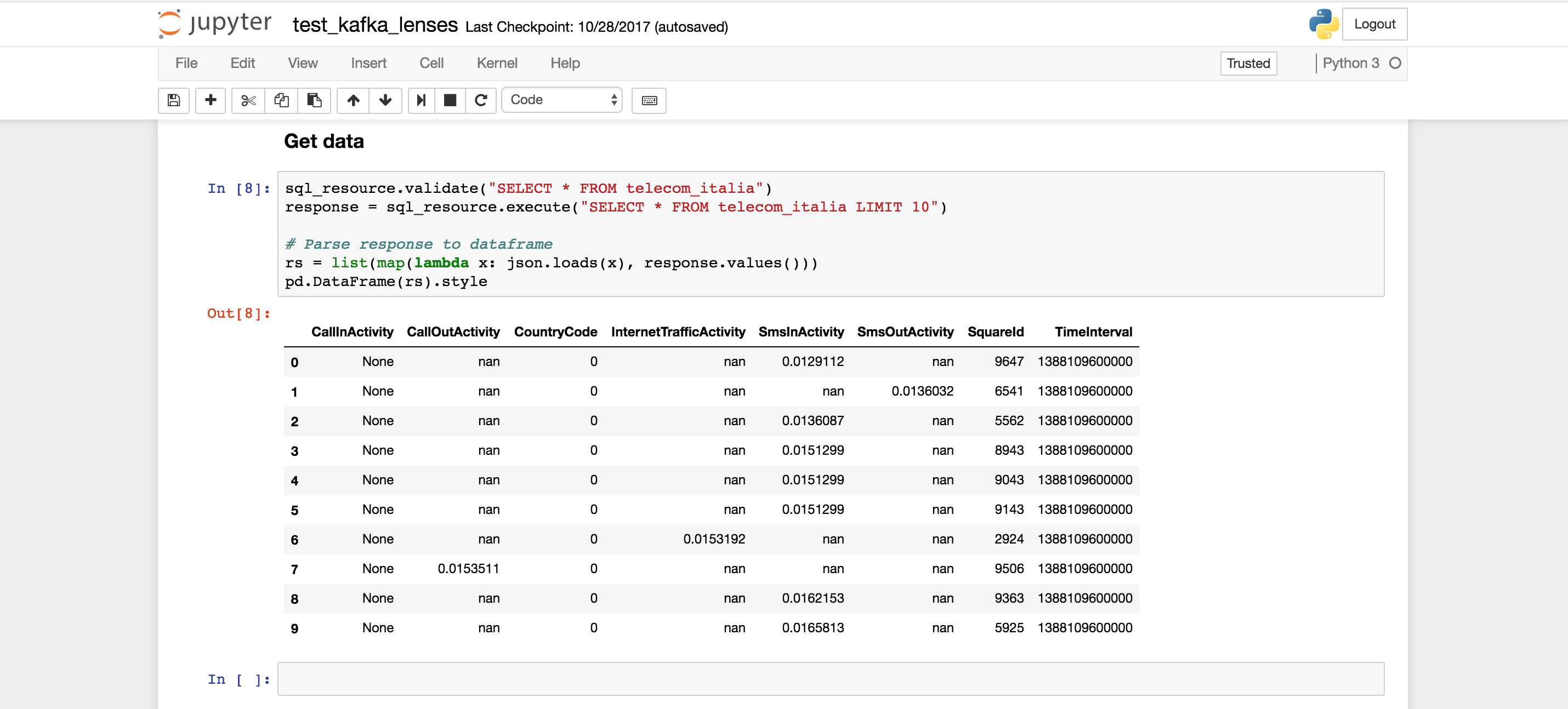

Here is an example of Jupyter integration via the Lenses APIs:
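As a rough idea of what a notebook cell could look like, the following Python sketch builds (but does not send) an HTTP request that carries a SQL query. Everything specific in it is an assumption to be checked against the Lenses API documentation: the base URL, the endpoint path, the name of the authentication header, the token value and the `payments` topic are all placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

# ASSUMPTIONS: none of these values are confirmed by this article.
LENSES_URL = "http://localhost:3030"    # placeholder base URL
SQL_ENDPOINT_PATH = "/api/sql/data"     # placeholder endpoint path
TOKEN_HEADER = "X-Kafka-Lenses-Token"   # placeholder auth header name


def build_query_request(base_url: str, token: str, sql: str) -> Request:
    """Build a GET request that passes the SQL text as a query parameter."""
    query_string = urlencode({"sql": sql})
    url = f"{base_url.rstrip('/')}{SQL_ENDPOINT_PATH}?{query_string}"
    headers = {TOKEN_HEADER: token, "Accept": "application/json"}
    return Request(url, headers=headers)


request = build_query_request(
    LENSES_URL,
    token="my-token",                        # placeholder token
    sql="SELECT * FROM payments LIMIT 10",   # placeholder topic name
)

# In a notebook you would then send it, for example:
#   from urllib.request import urlopen
#   with urlopen(request) as response:
#       body = response.read().decode("utf-8")
```

Keeping request construction separate from sending makes the cell easy to inspect and adapt before it talks to a real Lenses instance.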

Lenses comes with a Redux/React.js library for the Web Socket endpoints that supports the following Actions

You can subscribe and execute Lenses queries on your data from your JavaScript application. The full documentation for the JS library and the Web Socket API is available here.

We will also do a separate article on the Lenses supported integrations.

Lenses supports role-based access via basic or LDAP authentication.

Data protection regulations, such as the recent GDPR, and the presence of sensitive data are common obstacles to giving users access to data. There are multiple ways to tackle this with Lenses, but you can quickly test it by applying the nodata role to the authenticated user.

One of the features our users request most often is a convenient way to explore their data in Kafka, together with an easy way to collaborate with the business users or data scientists who need that data. We believe Lenses gives users a simple way to do this, while also exposing the feature via APIs so that multiple applications can hook into Kafka.

In the next articles of this “how-to” series, we will present how to deal with these integrations, as well as other features of Lenses that complete end-to-end real-time ETL pipelines, such as stream processing and Kafka Connect.

Blog: How to process data in Kafka with SQL - part2


Lenses for Kafka Development: The easiest way to start with Lenses for Apache Kafka is to get the Development Environment, a Docker image that contains Kafka, Zookeeper, Schema Registry, Kafka Connect, Lenses, a collection of 25+ Kafka Connectors, and example streaming data.