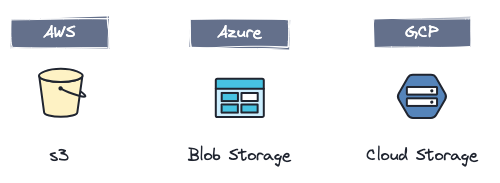

Overview of Cloud storage for your data platform

The ability to archive Kafka topic data is now essential. In this blog series, learn about the different Cloud Storage solutions available and their benefits.

One of the most important questions in architecting a data platform is where to store and archive data. In this blog series, we'll cover the different storage strategies for Kafka and introduce you to Lenses' S3 Connector for backup/restore.

In this first blog, we introduce the different Cloud storage options available. Later blogs will focus on specific solutions, explain in more depth how they map to Kafka, and show how Lenses manages your Kafka topic backups.

AWS S3 (Simple Storage Service), launched in 2006, was one of the first cloud storage services made available.

S3 offers industry-leading scalability, data availability, security, performance, and cost-effectiveness. With S3, you can store objects without ever worrying about infrastructure. Use it as you need it.

Some use cases that AWS S3 addresses:

Build a Data Lake

Run Cloud-Native Applications

Backup and Restore Critical Data

Archive Data at the Lowest Cost

Store data to train Generative AI models

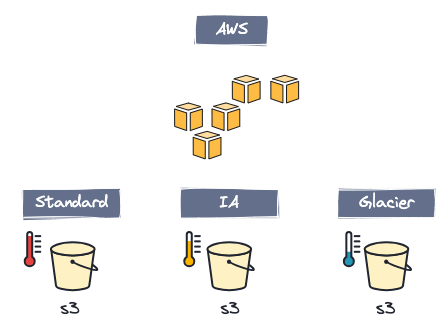

AWS offers different "Storage Classes", each designed for different data access patterns and with different attributes. Here is a quick breakdown; upcoming articles will dive deeper into each one:

S3 Standard

The default offering, for general-purpose, frequently accessed data such as website content.

S3 Intelligent-Tiering

Automatically moves data to the most cost-effective access tier based on how often it is accessed, which suits data with unknown or changing access patterns. For example, processed data files that may not be used again for a while.

S3 Standard-IA

For data accessed less frequently but requiring fast access when needed. For example, backup data with a low Recovery Time Objective (RTO).

S3 One Zone-IA

Similar to Standard-IA, but stores data in a single Availability Zone and costs 20% less than Standard-IA.

S3 Glacier Instant Retrieval

Low-cost storage for long-lived data that is rarely accessed and requires retrieval in milliseconds.

S3 Glacier Flexible Retrieval

Similar to Instant Retrieval, but up to 10% lower cost, for data that doesn't need to be retrieved immediately (retrievals take minutes to hours). For example, data accessed once or twice a year.

S3 Glacier Deep Archive

Lowest-cost storage that supports long-term retention and digital data preservation. Data that is stored for auditing purposes, for example.
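To make the storage classes concrete for the Kafka backup use case, here is a minimal sketch of an S3 lifecycle configuration that moves backup objects into cheaper classes as they age. It only builds the configuration as a plain Python dictionary following the shape of the S3 lifecycle API (`Rules`, `Filter`, `Transitions`, `StorageClass`). The `kafka-backups/` prefix, the day thresholds and the helper name `kafka_backup_lifecycle` are hypothetical. The validation is deliberately partial: it checks only the 30-day minimum before an IA transition and duplicate days.

```python
import json

# Storage class identifiers used by the S3 lifecycle API for transitions.
TRANSITION_CLASSES = {
    "STANDARD_IA",
    "ONEZONE_IA",
    "INTELLIGENT_TIERING",
    "GLACIER_IR",    # S3 Glacier Instant Retrieval
    "GLACIER",       # S3 Glacier Flexible Retrieval
    "DEEP_ARCHIVE",  # S3 Glacier Deep Archive
}


def kafka_backup_lifecycle(prefix, transitions, expire_after_days=None):
    """Build a lifecycle configuration for objects stored under `prefix`.

    `transitions` is a list of (days_after_creation, storage_class) pairs.
    Hypothetical helper: only a few of the constraints S3 enforces are checked.
    """
    rule_transitions = []
    last_days = None
    for days, storage_class in sorted(transitions):
        if storage_class not in TRANSITION_CLASSES:
            raise ValueError(f"unknown storage class: {storage_class}")
        if storage_class in {"STANDARD_IA", "ONEZONE_IA"} and days < 30:
            # S3 requires objects to be at least 30 days old before moving to an IA class.
            raise ValueError(f"{storage_class} transitions need days >= 30")
        if days == last_days:
            raise ValueError(f"two transitions scheduled on day {days}")
        last_days = days
        rule_transitions.append({"Days": days, "StorageClass": storage_class})

    rule = {
        "ID": "kafka-backup-tiering",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": rule_transitions,
    }
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}


if __name__ == "__main__":
    config = kafka_backup_lifecycle(
        "kafka-backups/",
        [(30, "STANDARD_IA"), (90, "GLACIER_IR"), (365, "DEEP_ARCHIVE")],
    )
    print(json.dumps(config, indent=2))
```

With credentials configured, a dictionary like this could be passed to boto3's `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config)`. Expiration is optional because retention requirements usually differ per topic.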

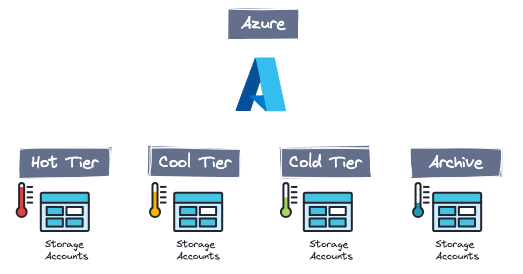

The Cloud Storage solution from Microsoft Azure is Blob Storage. The major difference in Microsoft's offering is the Azure Data Lake Storage Gen2 feature, which we'll explain later.

The data objects (files) are called blobs. There are three types of blobs: "Block Blobs", "Append Blobs" and "Page Blobs".

A collection of blobs is called a Container, similar to the bucket concept in AWS S3.

A set of containers belongs to a "Storage Account". For example, I can have one Storage Account for internal files that only my company's personnel will access, and another Storage Account for external access. These two accounts can be part of my data access strategy. Of course, this is an example, not a must-do.
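The Storage Account, Container and Blob hierarchy maps directly onto how a blob is addressed: `https://<account>.blob.core.windows.net/<container>/<blob>`. The sketch below builds such URLs and checks simplified versions of the Azure naming rules. Account names must be 3-24 lowercase letters and digits. Container names must be 3-63 lowercase letters, digits and single hyphens, starting and ending with a letter or digit. The account and container names used are hypothetical.

```python
import re
from urllib.parse import quote

# Simplified versions of the Azure naming rules.
ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")
CONTAINER_NAME = re.compile(r"(?=.{3,63}\Z)[a-z0-9]+(?:-[a-z0-9]+)*")


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Return the Blob endpoint URL of a blob in a container of a storage account."""
    if not ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not CONTAINER_NAME.fullmatch(container):
        raise ValueError(f"invalid container name: {container!r}")
    # '/' in blob names simulates folders; other special characters are percent-encoded.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"


if __name__ == "__main__":
    # Hypothetical accounts for the internal/external example above.
    print(blob_url("contosointernal", "hr-reports", "2024/q1 summary.pdf"))
    # https://contosointernal.blob.core.windows.net/hr-reports/2024/q1%20summary.pdf
    print(blob_url("contosoexternal", "public-assets", "logo.png"))
```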

Azure's Access Tiers play the same role as the Storage Classes in AWS.

Hot Tier

Optimized for storing data that is accessed or modified frequently. It is the standard tier, used for website content, for example.

Cool Tier

For data that is not accessed or modified frequently and is stored for at least 30 days. The storage cost is lower than the standard (Hot) tier, but the access cost is higher.

Cold Tier

Similar to Cool, but for data accessed even less often and stored for at least 90 days instead of 30. Storage costs are lower still, and access costs higher.

Archive Tier

An offline tier for rarely accessed data that can tolerate several hours of retrieval latency, because blobs must be rehydrated to an online tier before they can be read. This is the coldest tier, and the minimum storage duration is 180 days.
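Minimum storage durations matter when data is deleted or moved early. Azure bills the remaining days of the minimum period as a prorated early deletion charge. The helper below is a minimal sketch of that day arithmetic, using the durations listed above (Cool 30, Cold 90, Archive 180). Pricing is deliberately left out, and the function name is hypothetical.

```python
# Minimum storage durations (days) per Azure Blob access tier, as described above.
MINIMUM_DAYS = {"Hot": 0, "Cool": 30, "Cold": 90, "Archive": 180}


def early_deletion_days(tier: str, days_stored: int) -> int:
    """Days still billed if a blob in `tier` is deleted or moved after `days_stored` days."""
    if tier not in MINIMUM_DAYS:
        raise ValueError(f"unknown access tier: {tier}")
    if days_stored < 0:
        raise ValueError("days_stored must be non-negative")
    return max(0, MINIMUM_DAYS[tier] - days_stored)


if __name__ == "__main__":
    # A backup archived and then deleted after 45 days is billed for 135 more days.
    print(early_deletion_days("Archive", 45))  # 135
    print(early_deletion_days("Cool", 45))     # 0
```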

Data Lake Storage Gen2 is a feature that can be enabled on Blob Storage by turning on the hierarchical namespace. It is not a dedicated service; think of it as a superpower on top of Blob Storage that you can switch on.
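Because Gen2 is layered on the same storage account, the same file can be reached through two endpoints. One is the Blob endpoint (`<account>.blob.core.windows.net`). The other is the Data Lake endpoint (`<account>.dfs.core.windows.net`), which Hadoop and Spark reach through the ABFS driver and its `abfss://` URI scheme. The sketch below only formats those addresses for one file. The account, container and path are hypothetical.

```python
from urllib.parse import quote


def gen2_addresses(account: str, container: str, path: str) -> dict:
    """Format the addresses of one file in a Gen2-enabled storage account.

    In Data Lake terminology a container is also called a "file system".
    """
    encoded = quote(path.lstrip("/"), safe="/")
    return {
        # Classic Blob Storage REST endpoint.
        "blob": f"https://{account}.blob.core.windows.net/{container}/{encoded}",
        # Data Lake Storage Gen2 REST endpoint.
        "dfs": f"https://{account}.dfs.core.windows.net/{container}/{encoded}",
        # URI used by Hadoop/Spark through the ABFS driver (TLS variant).
        "abfss": f"abfss://{container}@{account}.dfs.core.windows.net/{encoded}",
    }


if __name__ == "__main__":
    addresses = gen2_addresses("datalakeacct", "raw", "kafka/orders/part-0000.parquet")
    for kind, address in addresses.items():
        print(f"{kind:>5}: {address}")
```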

Data Lake Gen2 capabilities include:

Hadoop-compatible access: designed to work with Apache Hadoop and all frameworks that use the Hadoop Distributed File System (HDFS), through the ABFS driver.

A hierarchical directory structure: you can organize objects in directories and nested subdirectories, much as you would on your computer.

Optimized cost and performance: thanks to the hierarchical namespace, Azure can go straight to an object or directory instead of scanning every object name. Directory operations such as renames also become single metadata operations rather than one operation per file.

A finer-grained security model: it supports Azure RBAC as well as POSIX-like access control lists (ACLs), so permissions can be applied at the directory or file level. All stored data is encrypted at rest using either Microsoft-managed or customer-managed encryption keys.

Massive scalability: Data Lake Gen2 doesn't impose any limits on account size, file size, or the amount of data stored in the data lake. Individual files can range in size from a few kilobytes to multiple petabytes.
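The difference between a flat and a hierarchical namespace can be illustrated with a toy model. This is not how Azure is implemented. It only shows the contrast between the two designs. In a flat store, listing or renaming a "directory" touches every object name that shares a prefix. A tree, by contrast, walks straight to the directory and can rename it in a single step. All class and method names are hypothetical.

```python
class FlatNamespace:
    """Toy flat store: a 'directory' is only a shared prefix of object names."""

    def __init__(self):
        self.objects = {}  # full object name -> data

    def put(self, name, data):
        self.objects[name] = data

    def list_dir(self, prefix):
        # Every object name has to be checked against the prefix.
        return sorted(n for n in self.objects if n.startswith(prefix))

    def rename_dir(self, old_prefix, new_prefix):
        # Each object under the prefix is re-written under a new name.
        to_move = [n for n in self.objects if n.startswith(old_prefix)]
        for n in to_move:
            self.objects[new_prefix + n[len(old_prefix):]] = self.objects.pop(n)
        return len(to_move)  # number of object operations performed


class HierarchicalNamespace:
    """Toy tree: directories are real nodes, so operations walk the path."""

    def __init__(self):
        self.root = {}

    def _walk(self, parts, create=False):
        node = self.root
        for part in parts:
            if part not in node:
                if not create:
                    raise FileNotFoundError("/".join(parts))
                node[part] = {}
            node = node[part]
        return node

    def put(self, path, data):
        *dirs, name = path.split("/")
        self._walk(dirs, create=True)[name] = data

    def list_dir(self, path):
        # Only the target directory's entries are read.
        return sorted(self._walk(path.split("/")))

    def rename_dir(self, old_path, new_path):
        *old_parent, old_name = old_path.split("/")
        *new_parent, new_name = new_path.split("/")
        subtree = self._walk(old_parent).pop(old_name)
        self._walk(new_parent, create=True)[new_name] = subtree
        return 1  # one metadata operation, however many files are inside


if __name__ == "__main__":
    flat, tree = FlatNamespace(), HierarchicalNamespace()
    for path in ["raw/2024/a.json", "raw/2024/b.json", "raw/2025/c.json"]:
        flat.put(path, b"{}")
        tree.put(path, b"{}")
    print(flat.rename_dir("raw/", "archive/"))  # 3 object operations
    print(tree.rename_dir("raw", "archive"))    # 1 operation
```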

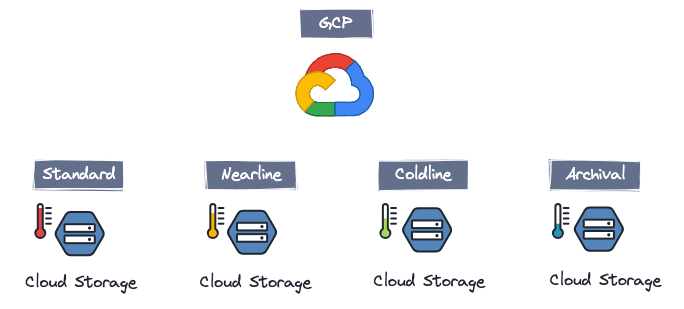

Google Cloud Storage is GCP's managed service for storing structured or unstructured data.

Cloud Storage is integrated with all Google Cloud services, and Google has done a great job of making that integration easy. This is partly helped by the fact that GCP has fewer services than Azure and AWS.

Like the other providers, Google offers different tiers:

Standard Storage

The default option and the hot tier for frequently accessed data, for example application content for a mobile app.

Nearline Storage

Useful for reducing costs on data accessed infrequently (e.g. once per month or less), such as a monthly report. It has a 30-day minimum storage duration.

Coldline Storage

Similar to Nearline storage, but most cost-effective for data accessed at most once per quarter, with a 90-day minimum storage duration. For example, auditing data that needs to stay online but is accessed very rarely.

Archival Storage

This option is the most cost-effective for long-term data accessed less than once a year. It has a 365-day minimum storage duration. The data remains online and accessible at any time, which makes it ideal for data only required for disaster recovery.
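The access-frequency guidance for the four tiers can be condensed into a rule of thumb. This sketch maps an expected number of reads per year to a Google Cloud Storage class, using the API identifiers `STANDARD`, `NEARLINE`, `COLDLINE` and `ARCHIVE`, together with its minimum storage duration. The thresholds follow the descriptions above and Google's guidance of less than once a year for Archive. The function name is hypothetical. A real decision should also weigh retrieval and operation pricing.

```python
from typing import NamedTuple


class GcsClass(NamedTuple):
    name: str          # storage class identifier used by the GCS API
    minimum_days: int  # minimum storage duration in days


def suggest_gcs_class(reads_per_year: float) -> GcsClass:
    """Rule-of-thumb storage class for data read `reads_per_year` times a year."""
    if reads_per_year < 0:
        raise ValueError("reads_per_year must be non-negative")
    if reads_per_year > 12:          # more than once a month
        return GcsClass("STANDARD", 0)
    if reads_per_year > 4:           # at most once a month
        return GcsClass("NEARLINE", 30)
    if reads_per_year >= 1:          # at most once a quarter
        return GcsClass("COLDLINE", 90)
    return GcsClass("ARCHIVE", 365)  # less than once a year


if __name__ == "__main__":
    for reads in (52, 12, 4, 0.5):
        print(reads, suggest_gcs_class(reads))
```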

Last but not least, what if we want cloud storage that can run in any cloud and on-premises?

There is an answer for that too.

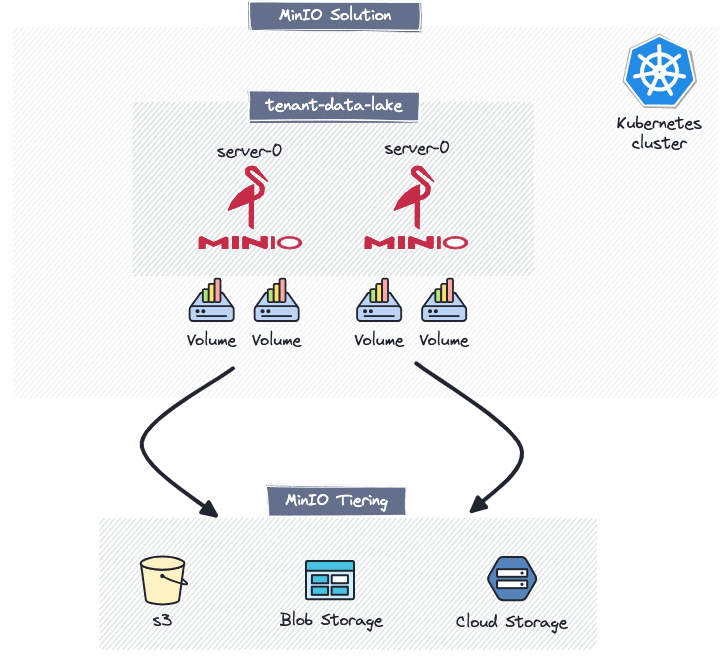

Kubernetes Object Storage: MinIO implements the S3 protocol to deliver a storage solution inside a Kubernetes cluster. It is not a SaaS offering, but it can help companies that want to keep data on-premises or build multi-cloud solutions.
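Because MinIO speaks the S3 protocol, client code written for AWS S3 can usually target MinIO just by changing the endpoint. One visible difference is URL style. AWS S3 favours virtual-hosted-style URLs (`https://<bucket>.s3.<region>.amazonaws.com/<key>`). A MinIO deployment is commonly addressed path-style (`<endpoint>/<bucket>/<key>`). The sketch below formats both. The in-cluster endpoint, bucket and key are hypothetical.

```python
from typing import Optional
from urllib.parse import quote


def s3_object_url(bucket: str, key: str, endpoint: Optional[str] = None,
                  region: str = "us-east-1") -> str:
    """Format an object URL for AWS S3 (virtual-hosted style) or an
    S3-compatible endpoint such as MinIO (path style)."""
    encoded_key = quote(key, safe="/")
    if endpoint is None:
        # AWS S3: the bucket name is part of the host name.
        return f"https://{bucket}.s3.{region}.amazonaws.com/{encoded_key}"
    # S3-compatible server: the bucket is the first path segment.
    return f"{endpoint.rstrip('/')}/{bucket}/{encoded_key}"


if __name__ == "__main__":
    key = "orders/0/00000042.json"
    print(s3_object_url("kafka-backups", key, region="eu-west-1"))
    # Hypothetical in-cluster MinIO service address.
    print(s3_object_url("kafka-backups", key,
                        endpoint="http://minio.data-lake.svc.cluster.local:9000"))
```

With boto3, the same switch is made by passing `endpoint_url` to `boto3.client("s3", ...)`. Path-style addressing can be forced with `botocore.config.Config(s3={"addressing_style": "path"})`.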

To simplify things, let's show MinIO in one picture. Future blogs will delve deeper into this solution.

Let us explain the diagram, its components and how they work.

Tenant = A logical organization inside a MinIO deployment. For example, we can create a Data Lake tenant with disks and a UI console created specifically for it, and we can create as many tenants as necessary. Think of a tenant like a Storage Account in Azure Blob Storage.

Server = A server runs the MinIO process and is the core of the MinIO solution. One tenant can have as many servers as needed, with each server managing the disks attached to it.

Disks = As a Kubernetes solution, MinIO uses Persistent Volumes for storing data, so the disks are managed by the Kubernetes cluster that hosts the MinIO deployment.
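With the MinIO Operator, tenants, servers and disks come together in a `Tenant` resource. Each server pool declares how many servers it runs and how many Persistent Volumes each server gets. The sketch below builds such a manifest as a Python dictionary and counts the resulting volumes and raw capacity. Field names follow the MinIO Operator's Tenant spec as we understand it (`pools`, `servers`, `volumesPerServer`, `volumeClaimTemplate`); verify them against your operator version. The tenant name, namespace and sizes are hypothetical, and the raw capacity ignores any redundancy overhead.

```python
def tenant_manifest(name, namespace, servers, volumes_per_server, volume_size_gi):
    """Hypothetical helper: a single-pool MinIO Tenant resource as a dict."""
    return {
        "apiVersion": "minio.min.io/v2",
        "kind": "Tenant",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "pools": [
                {
                    "name": "pool-0",
                    "servers": servers,
                    "volumesPerServer": volumes_per_server,
                    # Each volume becomes a PersistentVolumeClaim managed by Kubernetes.
                    "volumeClaimTemplate": {
                        "spec": {
                            "accessModes": ["ReadWriteOnce"],
                            "resources": {"requests": {"storage": f"{volume_size_gi}Gi"}},
                        }
                    },
                }
            ]
        },
    }


def volume_count(manifest):
    """Total Persistent Volumes requested across all pools."""
    return sum(p["servers"] * p["volumesPerServer"] for p in manifest["spec"]["pools"])


def raw_capacity_gi(manifest):
    """Raw capacity in GiB, before any redundancy overhead."""
    total = 0
    for pool in manifest["spec"]["pools"]:
        size = pool["volumeClaimTemplate"]["spec"]["resources"]["requests"]["storage"]
        if not size.endswith("Gi"):
            raise ValueError(f"expected a size in Gi, got {size!r}")
        total += pool["servers"] * pool["volumesPerServer"] * int(size[:-2])
    return total


if __name__ == "__main__":
    m = tenant_manifest("data-lake", "minio-data-lake", servers=4,
                        volumes_per_server=4, volume_size_gi=100)
    print(volume_count(m), "volumes,", raw_capacity_gi(m), "GiB raw")  # 16 volumes, 1600 GiB raw
```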

Tiering = MinIO is not a SaaS solution, so how can I store data in a different cloud? MinIO supports tiering, where objects can be transitioned to other Cloud storage (e.g. GCP, Azure, ...). It's important to state that tiering is not a backup. The data is moved, not copied, and MinIO keeps the metadata needed to read it back from the remote tier. The remote bucket therefore cannot be used on its own to restore MinIO, and applications should keep accessing the data through MinIO rather than pointing at the Cloud storage directly.

In this article, we covered the evolution of storage solutions and the Cloud offerings on the market, and we added a little extra item to our Batman utility belt: MinIO.

The different Cloud Storage offerings may seem very similar. Which one is best is difficult to answer: it depends on your exact problem, your organization's strategy and the skills in your team. Weighing these factors is how you will find the right storage solution for your company and get the expected results.

Another point of view: companies usually have a single cloud provider. We foresee that sharing data between clouds will gain traction in the coming years. However, this is still at an early stage, and only some companies are adopting a multi-cloud strategy.

If you use S3 as your preferred Cloud storage solution and want to move data in and out of S3, check out the Lenses S3 Connector on our GitHub repo and stay tuned for more blogs on the subject.

Cheers!