Lenses 5.5 - Self-service streaming data movement, governed by GitOps

New Open-Source Kafka Connectors and as-code support that integrates with your CI/CD pipelines.

In this age of AI, the demand for real-time data integration is greater than ever. For many organizations, these data pipelines should no longer be configured and deployed by a centralized team. Instead, ownership should be distributed, so that each owner builds their own flows independently.

But how do you make this simple whilst still practicing good software and data governance?

Introducing Lenses 5.5. This release focuses on letting any type of user integrate real-time data whilst following your CI/CD practices, without relying on centralized teams.

Let’s take a look.

While Kafka is still in its infancy in your organization, you can probably get away without following your corporate software delivery practices.

But with Kafka now supporting critical business workloads and requiring access by potentially hundreds of users, bypassing your CI/CD practices is a no-no.

Experienced Lenses users will know that the Lenses CLI client can be integrated into your CI/CD pipeline to automate the deployment of Kafka entities, including topics, schemas, connectors, and SQL Processors.

But this didn’t offer the best Developer Experience: users had to write their own YAML files following the as-code specification.

Starting with Connectors in version 5.5, Lenses now lets you view, edit and download the as-code definition of your Kafka Connectors, so you can easily commit it to your Git repository and trigger a CI/CD deployment.

You get the simplicity of a UI designed for users of all skill levels, protected by granular RBAC and auditing, whilst still conforming to your software delivery practices.

Stay tuned as we expand as-code capabilities across all of Lenses in the coming 5.x and 6.0 releases.

The Kafka Open-Source community is what has made Lenses successful. Since 2016, we have been contributing to Open-Source components, from our Connectors to our Connect Secrets Manager.

Giving the community more choice when it comes to Kafka Connectors is critical to making sure that organizations of all sizes and levels of maturity can easily integrate data with Kafka. Today, too many connectors are closed-source or only partially open-source.

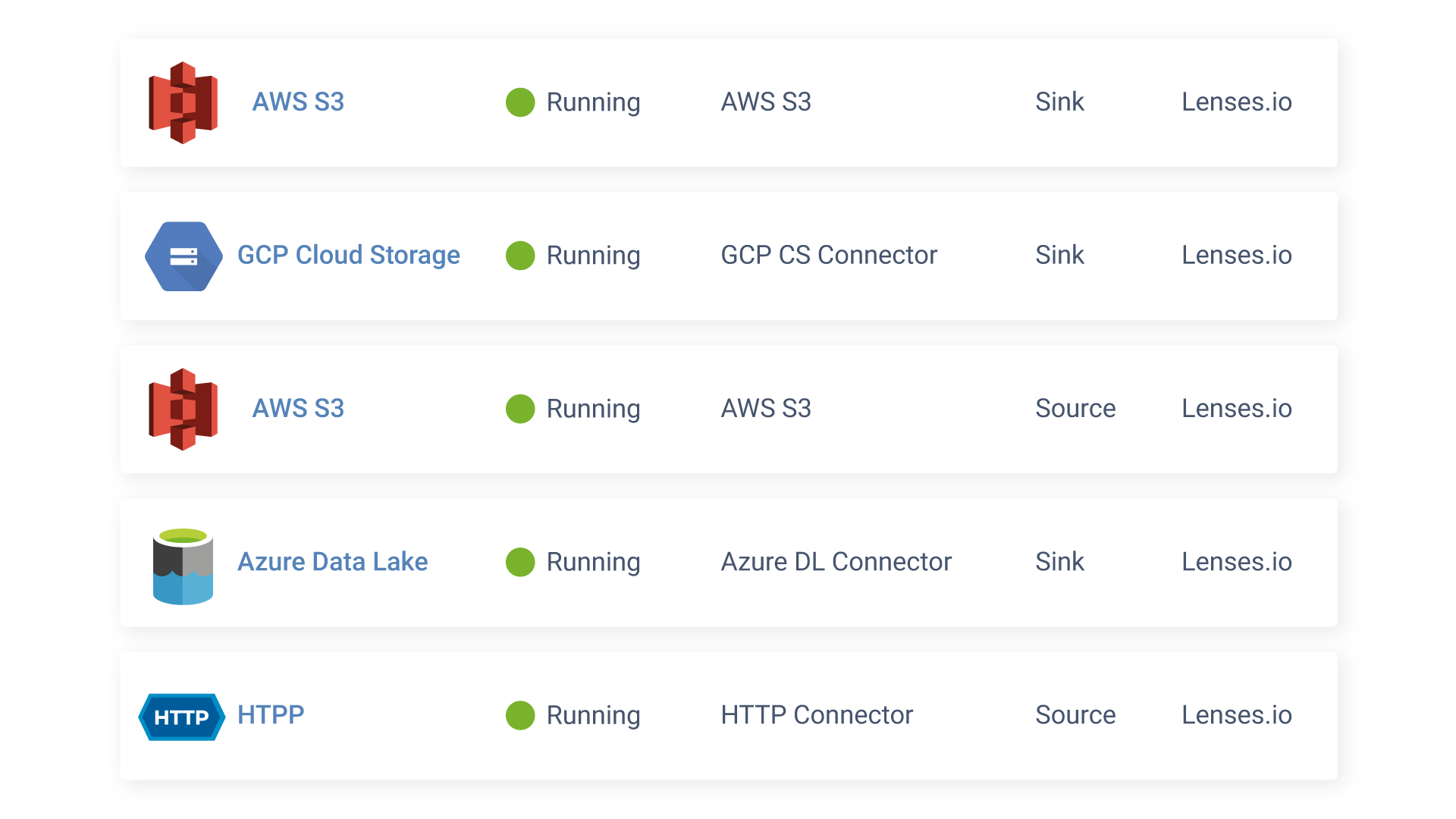

That’s why we are continuing our Kafka Connector contributions. By popular demand, we are releasing the Azure Data Lake Sink, GCP Cloud Storage Sink and HTTP Sink connectors.

As with our Amazon S3 Source and Sink Connectors, we offer optional enterprise support and an enhanced Developer Experience for Lenses customers.

Watch how you can back up Kafka topics to Google Cloud Storage:

Our users have been asking us to help clean up pesky topic offsets from Consumer Groups. Picture this: you've moved on from your "red" topics to "blue" ones, but your Consumer Group still holds offsets for the old red topics. It might not seem like a big deal, but when you're dealing with thousands of partitions, it can get chaotic.

With Lenses 5.5, you can sort this out easily, and safely, with RBAC and audit trails in place.
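To make the "red"/"blue" scenario concrete, here is a minimal Python sketch of how you might spot leftover offsets yourself. It assumes you already have a Consumer Group's committed offsets as a dictionary keyed by (topic, partition), plus the set of topics the group currently consumes. The function `stale_offsets` and the topic names are hypothetical, and this is not how Lenses implements the feature.

```python
# Hypothetical illustration only: not a Lenses or Kafka client API.
TopicPartition = tuple[str, int]  # (topic name, partition number)


def stale_offsets(
    committed: dict[TopicPartition, int],
    subscribed: set[str],
) -> dict[TopicPartition, int]:
    """Return the committed offsets a group still holds for topics it no longer consumes."""
    return {tp: offset for tp, offset in committed.items() if tp[0] not in subscribed}


if __name__ == "__main__":
    # Example data: the group has moved from "red-orders" to "blue-orders".
    committed = {
        ("red-orders", 0): 42,
        ("red-orders", 1): 17,
        ("blue-orders", 0): 5,
    }
    leftovers = stale_offsets(committed, {"blue-orders"})
    for (topic, partition), offset in sorted(leftovers.items()):
        print(f"{topic}[{partition}] still committed at offset {offset}")
```

Finding the leftovers is the easy part; removing them across thousands of partitions, with the right permissions and an audit trail, is the step the UI takes care of.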

We've also added a time-saving shortcut: with just one click, you can jump straight to the Kafka messages your Consumer Group offsets point to. No more hunting around to track down messages.

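And ever wondered what "current" and "latest" really mean in Consumer Offsets? They're about what's coming next, not where you are right now: the current offset is the next message the group will read, and the latest offset is where the next message produced to the partition will be written. We've added tooltips to clear that up, and we now highlight empty partitions so you can easily address them.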
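A minimal Python sketch of the distinction, assuming "current" corresponds to the group's committed offset and "latest" to the partition's log-end offset, as in standard Kafka tooling. The `PartitionOffsets` class and its field names are hypothetical and chosen for illustration only. Because both offsets name the next record rather than the last one, their difference is the group's lag, and a partition whose earliest retained offset equals its latest offset holds no records.

```python
from dataclasses import dataclass


@dataclass
class PartitionOffsets:
    """Offsets for one partition as seen by one consumer group (hypothetical type)."""

    partition: int
    log_start: int  # earliest offset still retained in the partition
    latest: int     # log-end offset: where the NEXT produced record will be written
    current: int    # committed offset: the NEXT record the group will read


def lag(p: PartitionOffsets) -> int:
    # Both offsets point at "the next" record, so the difference is the number
    # of offsets the group still has to read (roughly, unconsumed records).
    return max(p.latest - p.current, 0)


def is_empty(p: PartitionOffsets) -> bool:
    # No retained records: the first available offset is also the next one to be written.
    return p.log_start == p.latest


if __name__ == "__main__":
    partitions = [
        PartitionOffsets(partition=0, log_start=0, latest=120, current=100),
        PartitionOffsets(partition=1, log_start=50, latest=50, current=50),
    ]
    for p in partitions:
        print(f"partition {p.partition}: lag={lag(p)}, empty={is_empty(p)}")
```

Running it reports a lag of 20 for partition 0 and flags partition 1 as empty, even though its offsets are all 50: records were written there once, but none are retained any more.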

Ever needed to resend a Kafka message? Maybe you’re testing a change in your data pipeline, or you’ve fixed a bug and want to resend the message that triggered it. With Lenses, you can now do this with the click of a button, from both Explore and SQL Studio.

Lenses data production alerts notify you when the throughput of a data producer falls below what is expected, which may signal a problem.

This feature has now been extended so you can also be alerted when “at least one” event has been produced. This is particularly useful when you want to know that one or more events have landed in a Dead Letter Queue (DLQ).

We hope you enjoy this release: a first step into as-code practices for your streaming data, new connectors, a series of clean-up shortcuts, and more UI-driven Kafka experiences.

_______

Read the 5.5 upgrade notes