Why choose the leading developer experience for Kafka?

Three benefits of using Lenses.

Open up your data platform to more users

As new data sources explode into being, developers need the freedom to explore and troubleshoot apps and data pipelines, limited only by the rules of your business:

- Search a universe of data across apps, pipelines, and environments.

- Catalog and share all your Kafka assets from one place.

- Integrate data streams with any object store, data lake, or repository.

Prioritize data protection and security

Never compromise on regulatory compliance or risk a security breach. The Lenses developer experience allows you to:

- Set rules for sharing and collaboration.

- Mask sensitive fields and metadata.

- Apply role-based access across Kafka environments.

Standardize coding processes and practices

- Build streaming pipelines and processors using simple SQL syntax.

- View and deploy your event-driven applications as configuration, for good software governance.

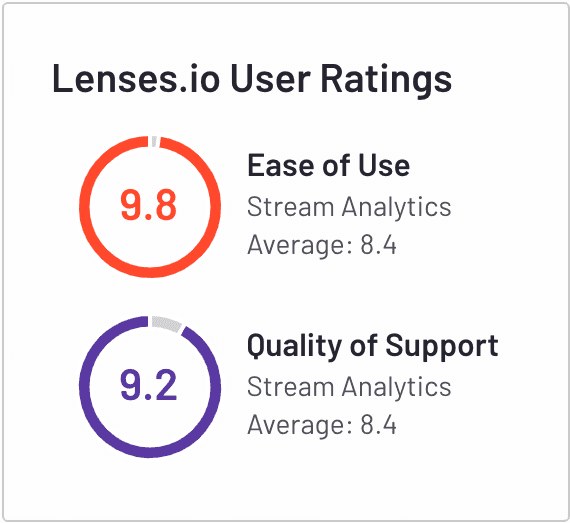

Data Platform excellence

100% Satisfaction

Lenses is the highest-rated product for real-time stream analytics, according to independent third-party reviews.
